Artificial Intelligence (AI) has made significant strides in the field of health diagnosis, offering tools that promise to enhance diagnostic accuracy, streamline workflows, and improve patient outcomes. AI systems, particularly those based on machine learning and deep learning, have demonstrated impressive capabilities in analyzing medical images, predicting disease risk, and supporting clinical decision-making. However, despite these advancements, there are several limitations to AI in health diagnosis that must be addressed to fully realize its potential and ensure its effective integration into clinical practice.

Data Quality and Quantity

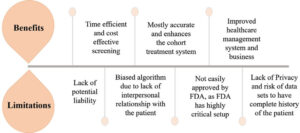

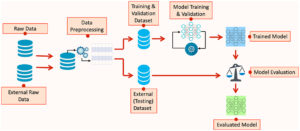

One of the primary limitations of AI in health diagnosis is its reliance on large, high-quality datasets for training and validation. AI algorithms, particularly those using deep learning techniques, require vast amounts of data to achieve high accuracy and generalizability. However, obtaining such data is difficult: suitable datasets are often scarce, privacy rules restrict how patient records can be shared, and the quality of what is available varies widely.

Medical datasets are often fragmented, incomplete, or biased, which can negatively impact the performance of AI models. For instance, data collected from a particular demographic or geographic region may not be representative of the broader population, leading to biased or less accurate diagnostic predictions for underrepresented groups. Additionally, variations in data quality, such as inconsistent imaging protocols or incomplete patient records, can affect the reliability of AI systems. Ensuring that AI models are trained on diverse, high-quality datasets is crucial for improving their diagnostic accuracy and generalizability.
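
As a rough illustration of how representativeness might be checked before training, the sketch below compares each group's share of a dataset with its share of a reference population. The function name `representation_gaps`, the `group` field, the toy records, the reference shares, and the 10-percentage-point threshold are all assumptions made for this example, not part of any standard tool.

```python
from collections import Counter


def representation_gaps(records, reference_shares, key="group"):
    """Return (dataset share - reference share) for each reference group.

    A negative value means the group is underrepresented in the dataset
    relative to the reference population.
    """
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, ref_share in reference_shares.items():
        data_share = counts.get(group, 0) / total if total else 0.0
        gaps[group] = data_share - ref_share
    return gaps


# Hypothetical toy data: 8 records from group A, 2 from group B.
records = [{"group": "A"}] * 8 + [{"group": "B"}] * 2
# Hypothetical reference population: both groups equally common.
reference = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(records, reference)
# Flag groups more than 10 percentage points below their reference share
# (the threshold is an arbitrary choice for illustration).
underrepresented = [g for g, gap in gaps.items() if gap < -0.1]
print(gaps)
print(underrepresented)
```

A check like this only covers attributes that are actually recorded; it says nothing about label quality or differences in imaging protocols.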

Algorithmic Bias and Fairness

Algorithmic bias is another significant limitation of AI in health diagnosis. AI systems learn from historical data, and if this data contains inherent biases, such as disparities in the representation of different patient groups, the algorithms can perpetuate and even amplify them. For example, an AI model trained predominantly on data from one racial or ethnic group may perform worse for individuals from other groups, potentially leading to disparities in diagnostic accuracy and healthcare outcomes.

Addressing algorithmic bias requires careful attention to the diversity of training datasets and ongoing monitoring of AI systems to identify and correct biases. Developers and healthcare providers must collaborate to ensure that AI models are evaluated for fairness and that any disparities are addressed to provide equitable diagnostic support for all patients.
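
One simple way to evaluate a model for fairness is to compute the same diagnostic metrics separately for each patient group and compare them. The sketch below does this for sensitivity and specificity. The function name `per_group_rates`, the group labels, and the toy predictions are hypothetical and chosen only to make the arithmetic easy to follow.

```python
from collections import defaultdict


def per_group_rates(rows):
    """Compute sensitivity and specificity per group.

    rows: iterable of (group, y_true, y_pred) with binary 0/1 labels.
    """
    tally = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, y_true, y_pred in rows:
        if y_true == 1:
            tally[group]["tp" if y_pred == 1 else "fn"] += 1
        else:
            tally[group]["tn" if y_pred == 0 else "fp"] += 1

    rates = {}
    for group, c in tally.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        rates[group] = {
            # None marks a rate that is undefined for this group.
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return rates


# Hypothetical predictions for two patient groups.
rows = (
    [("A", 1, 1)] * 4 + [("A", 0, 0)] * 3 + [("A", 0, 1)]
    + [("B", 1, 1)] * 2 + [("B", 1, 0)] * 2 + [("B", 0, 0)] * 4
)

rates = per_group_rates(rows)
sensitivity_gap = rates["A"]["sensitivity"] - rates["B"]["sensitivity"]
print(rates)
print(sensitivity_gap)
```

In this toy data the model misses half of the true cases in group B while catching all of them in group A, a gap that an overall accuracy figure would hide.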

Transparency and Explainability

The “black box” nature of many AI models poses a challenge for transparency and explainability in health diagnosis. AI systems, especially those based on complex deep learning architectures, often provide predictions or recommendations without offering clear explanations of how these conclusions were reached. This lack of transparency can undermine trust in AI systems and complicate the ability of healthcare professionals to understand and validate AI-driven diagnostic suggestions.

To address this issue, there is a growing emphasis on developing explainable AI (XAI) models that provide insights into the decision-making processes of AI systems. Explainable AI aims to make the inner workings of AI models more transparent and understandable, allowing clinicians to interpret and validate the recommendations made by these systems. Enhancing transparency and explainability is crucial for fostering trust in AI technologies and ensuring their effective integration into clinical practice.
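
Permutation importance is one widely used, model-agnostic explanation technique: shuffle a single input feature and measure how much the model's accuracy drops. The sketch below applies it to a deliberately trivial stand-in model. The `toy_model` rule, the `marker` and `room` features, and the data are hypothetical; a real diagnostic model would be far more complex, and this is an illustration of the idea rather than a recommended XAI method for clinical use.

```python
import random


def toy_model(row):
    """Hypothetical rule-based classifier: flags high risk if marker > 5."""
    return 1 if row["marker"] > 5 else 0


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature, repeats=20, seed=0):
    """Mean drop in accuracy when one feature's values are shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        values = [r[feature] for r in rows]
        rng.shuffle(values)
        # Copy each row, replacing only the shuffled feature.
        permuted = [{**r, feature: v} for r, v in zip(rows, values)]
        drops.append(baseline - accuracy(model, permuted, labels))
    return sum(drops) / repeats


# Hypothetical data: "marker" drives the prediction, "room" is irrelevant.
rows = [{"marker": m, "room": m % 3} for m in range(1, 11)]
labels = [toy_model(r) for r in rows]

marker_importance = permutation_importance(toy_model, rows, labels, "marker")
room_importance = permutation_importance(toy_model, rows, labels, "room")
print(marker_importance, room_importance)
```

Shuffling `room` leaves accuracy unchanged because the model never reads it, while shuffling `marker` degrades accuracy. A clinician can use this kind of signal to check whether a model relies on clinically plausible inputs.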

Integration with Clinical Workflow

Integrating AI systems into existing clinical workflows presents another challenge. AI tools must seamlessly fit into the day-to-day practices of healthcare professionals without causing disruption or requiring significant changes to established procedures. This integration involves not only technical considerations but also training and adaptation for healthcare providers.

AI systems must be designed with usability in mind, ensuring that they complement rather than complicate clinical workflows. Additionally, healthcare professionals need to be trained to effectively use AI tools and interpret their outputs. Collaboration between AI developers, healthcare providers, and administrators is essential to ensure that AI systems are integrated smoothly and effectively into clinical environments.

Ethical and Regulatory Concerns

Ethical and regulatory concerns also limit the deployment of AI in health diagnosis. Issues related to patient privacy, consent, and the accountability of AI systems must be carefully managed to ensure the responsible use of AI technologies. For example, using patient data to train AI models raises questions about privacy and consent: patients need to be informed about how their data will be used and be able to opt out.

Furthermore, the regulatory landscape for AI in healthcare is still evolving. AI systems must meet established standards for safety, efficacy, and reliability, both to gain regulatory approval and to be used responsibly afterwards. Policymakers and regulatory bodies must work to develop and enforce guidelines that address the unique challenges posed by AI technologies in healthcare.

Limitations in Generalization

AI models may also face limitations in generalizing across different clinical settings or patient populations. An AI system trained in one healthcare environment may not perform as well in another due to differences in data collection methods, clinical practices, or patient demographics. This limitation highlights the importance of validating AI models in diverse settings to ensure their robustness and applicability across various healthcare contexts.
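
When a model is validated at a new site, a point estimate of accuracy alone can mislead, especially with small samples. The sketch below compares accuracy at two sites using the Wilson score interval, a standard confidence interval for a proportion. The site names and counts are hypothetical, and treating non-overlapping 95% intervals as a warning sign is a simplification rather than a formal statistical test.

```python
import math


def wilson_interval(correct, total, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = correct / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half, centre + half


# Hypothetical validation results: (correct predictions, total cases).
sites = {"development_site": (90, 100), "external_site": (70, 100)}

intervals = {name: wilson_interval(c, n) for name, (c, n) in sites.items()}
dev_low, dev_high = intervals["development_site"]
ext_low, ext_high = intervals["external_site"]

# Non-overlapping intervals suggest the drop is unlikely to be noise alone.
performance_drop_flagged = ext_high < dev_low
print(intervals)
print(performance_drop_flagged)
```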

Continuous evaluation and adaptation of AI systems are necessary to maintain their relevance and effectiveness as healthcare practices and patient populations evolve. AI models should be regularly updated and tested to ensure that they remain accurate and applicable in a wide range of clinical scenarios.

Conclusion

While AI holds great promise for enhancing health diagnosis, several limitations must be addressed to fully realize its potential. Issues related to data quality and quantity, algorithmic bias, transparency, integration with clinical workflows, ethical and regulatory concerns, and generalization challenges highlight the need for ongoing research, development, and collaboration in the field of AI in healthcare. By addressing these limitations, the healthcare industry can better harness the power of AI to improve diagnostic accuracy, patient outcomes, and overall healthcare delivery.